DEEP – Data Efficiency in Embedded Processors

- Contact:

Prof. Dr. Torben Ferber (Consortium Coordinator)

- Project group:

Prof. Becker

- Funding:

German Federal Ministry of Education and Research (BMBF), ErUM-Data Action Plan, Call of 11 October 2024 – Software and Algorithms with a Focus on Artificial Intelligence and Machine Learning

- Partner:

Karlsruhe Institute of Technology (KIT/ETP, KIT/ITIV), University of Bonn, University of Freiburg, Hamburg University of Technology, SiMa Technologies Germany GmbH. Associated Partners: SICK AG, DESY (MSK)

- Startdate:

01.10.2025

- Enddate:

30.09.2028

Empowering Data Efficiency In Embedded Processors with Artificial Intelligence (DEEP)

Motivation

Our large research infrastructures generate immense and constantly growing amounts of data. For example, with the transition to the High-Luminosity Large Hadron Collider (HL-LHC) at CERN, data production will increase tenfold, which cannot be handled with existing technology in an environmentally sustainable way. This data deluge is a general societal issue of our information era. While modern machine learning (ML) methods have led to major advances in fundamental research in recent years, this has mainly concerned the analysis of previously stored data on conventional computing architectures (CPUs, GPUs). By integrating ML algorithms into embedded processors near the sensors, the need for data transmission and storage can be significantly reduced already at the source. However, programming such systems, particularly FPGAs and heterogeneous Systems-on-Chip (SoCs), requires specialized knowledge that often exceeds the typical training period of PhD students – posing a significant barrier for many research projects.

Project goals

DEEP (Data Efficiency in Embedded Processors) serves as a virtual national competence center for heterogeneous SoCs in ErUM, addressing the challenges of digitization in research on the Universe and Matter. The main goals are:

- Development of software tools and an HLS framework for implementing ML algorithms on heterogeneous SoCs (AMD Versal, SiMa.ai)

- Building world-leading expertise for next-generation heterogeneous SoCs in Germany

- Deploying ultra-fast ML methods at ErUM research infrastructures for accelerator control, hardware triggers, and calibration

- Reducing vendor dependency by investigating multiple SoC platforms

- Knowledge and technology transfer to the ErUM community and industry through open-source tools, workshops, and training

Realization of the project

The project is divided into three work packages (WP):

- WP1 – Cooperation: Combining, advancing, and disseminating common know-how. Consortium organization, internal qualification, national and international cooperation (DRD7, FastML), and community training through workshops co-organized with the ErUM-Data Hub.

- WP2 – Software and Tools: Facilitating ML implementation on heterogeneous SoCs. Algorithm optimization, development of toolchains for AMD Versal FPGAs and AI-Engines based on MLIR and HLS, overarching methodology for workload partitioning across heterogeneous architectures, and adaptation of the SiMa.ai toolchain for real-time applications.

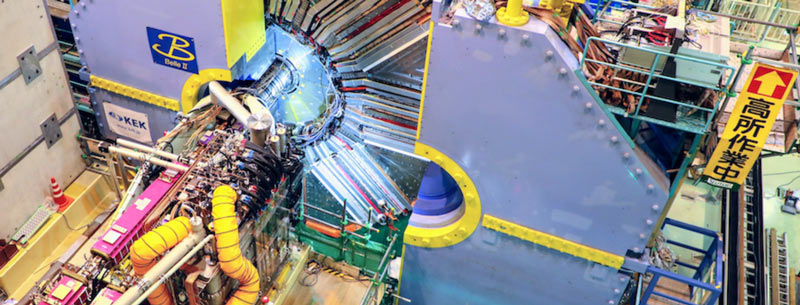

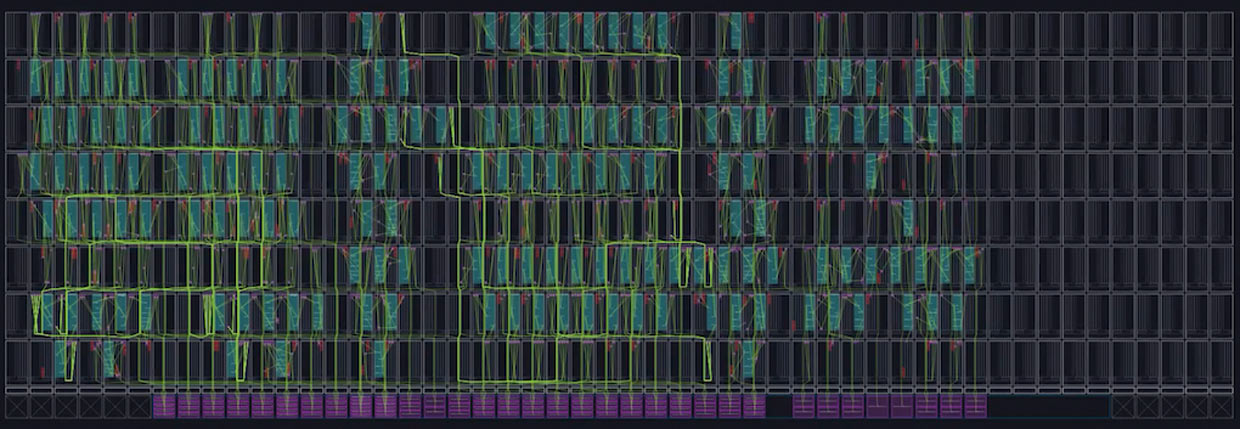

- WP3 – Algorithms and Applications: Development and implementation of ML algorithms at ErUM research infrastructures. Pilot applications include: real-time GNN-based track reconstruction at Belle II and AMBER, alignment algorithms on heterogeneous architectures for LHCb, space-time clustering for neutron detectors (ESS/FRM II), anomaly classification for European XFEL cavities, real-time machine emulator for PETRA III/IV, and technology transfer to industry partners.

- Participating research infrastructures: Belle II@SuperKEKB (KEK), LHCb@LHC and AMBER@SPS (CERN), PETRA III/IV and European XFEL (DESY), Heinz Maier-Leibnitz (FRM II) and ESS.

ITIV participation

The research group of Prof. Becker at the Institute for Information Processing Technology (ITIV) brings extensive experience in the design and application of reconfigurable hardware. Key areas include architecture design for embedded systems, hardware/software co-design, and application-specific architectures. ITIV is involved in several central tasks:

- WP2.T2: Development of the toolchain for AMD Versal FPGAs, including an HLS kernel library and a novel floor-planning concept

- WP2.T3: Toolchain for AMD Versal AI-Engines, in particular partitioning of ML models onto the AI-Engine array architecture

- WP2.T4: Overarching methodology and partitioning – merging the FPGA and AI-Engine toolchains into a common workflow with automated workload distribution

- WP3.T1: Implementation of real-time track reconstruction using Graph Neural Networks for Belle II on AMD Versal

- WP3.T7: Transfer of developed ML deployment solutions to industry applications

The group already collaborates with Prof. Ferber's group on the scalability of dynamic Graph Neural Networks on AMD Versal and jointly supervises MSc and PhD students.