Compiler-Aware Spiking Neural Architecture Search

- Type: Bachelor / Master thesis

- Date: 01/2026

- Supervisor:

Compiler-Aware NAS

Description

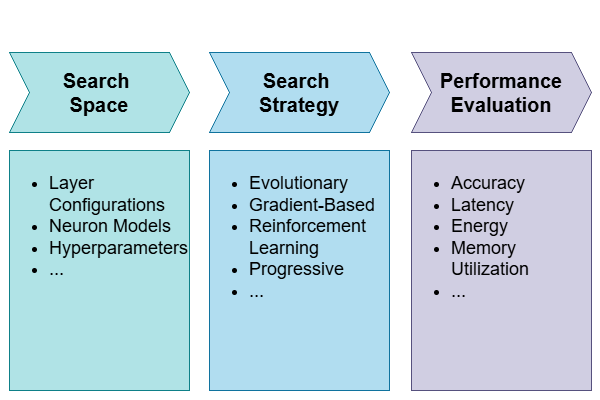

Spiking Neural Networks (SNNs) are bio-inspired neural networks that promise higher energy efficiency than traditional artificial neural networks (ANNs), although they often lag behind them in task performance. Neural Architecture Search (NAS) can automatically optimize SNN topologies for accuracy, but deploying the resulting models efficiently in resource-constrained environments also requires taking the target hardware into account. Previous hardware-aware NAS approaches have primarily targeted area, power, or storage, whereas metrics that are critical for compiler mapping and execution efficiency (e.g., data reuse and memory tiling) are frequently overlooked. This thesis aims to close this gap by integrating compiler-estimated metrics (e.g., derived from simulation) directly into the SNN-NAS objective function, steering the search toward architectures that can be deployed efficiently. The exact focus of the thesis can be adjusted.
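To illustrate what integrating a compiler-estimated metric into the NAS objective could look like, here is a minimal sketch, not a method prescribed by this topic. It assumes a hypothetical chip whose cores hold at most 256 neurons and 64K synapses, counts how many cores a fully connected SNN candidate needs after tiling each layer onto them, and combines that count with a placeholder accuracy estimate in a weighted objective. `Candidate`, `layer_tiles`, `compiler_cost`, `accuracy_proxy` and the weight `lam` are illustrative names, not part of any existing tool.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical per-core limits of a target neuromorphic chip (assumed values).
NEURONS_PER_CORE = 256
SYNAPSES_PER_CORE = 64 * 1024


@dataclass(frozen=True)
class Candidate:
    """One point in a toy search space: a fully connected SNN."""
    widths: tuple[int, ...]  # hidden-layer widths
    timesteps: int           # simulation timesteps per inference


def layer_tiles(fan_in: int, fan_out: int) -> int:
    """Cores needed to map one dense layer, limited by neurons or synapses."""
    by_neurons = math.ceil(fan_out / NEURONS_PER_CORE)
    by_synapses = math.ceil(fan_in * fan_out / SYNAPSES_PER_CORE)
    return max(by_neurons, by_synapses)


def compiler_cost(c: Candidate, n_in: int = 784, n_out: int = 10) -> int:
    """Toy compiler-estimated metric: total cores after tiling all layers."""
    sizes = (n_in, *c.widths, n_out)
    return sum(layer_tiles(a, b) for a, b in zip(sizes, sizes[1:]))


def accuracy_proxy(c: Candidate) -> float:
    """Hypothetical stand-in for a trained or zero-shot accuracy estimate."""
    return 1.0 - 1.0 / math.sqrt(sum(c.widths)) - 0.2 / c.timesteps


def objective(c: Candidate, lam: float = 0.01) -> float:
    """Scalarized NAS objective: reward accuracy, penalize mapped core count."""
    return accuracy_proxy(c) - lam * compiler_cost(c)


def random_search(n_trials: int = 200, seed: int = 0) -> Candidate:
    """Plain random search; a real study would use a proper NAS sampler."""
    rng = random.Random(seed)
    best, best_score = None, -math.inf
    for _ in range(n_trials):
        depth = rng.randint(1, 3)
        cand = Candidate(
            widths=tuple(rng.choice([64, 128, 256, 512]) for _ in range(depth)),
            timesteps=rng.choice([2, 4, 8]),
        )
        score = objective(cand)
        if score > best_score:
            best, best_score = cand, score
    return best


if __name__ == "__main__":
    best = random_search()
    print(best, compiler_cost(best), round(objective(best), 3))
```

In an actual thesis, `compiler_cost` would be replaced by metrics reported by a compiler or a simulator such as SANA-FE, and the weighted sum could be swapped for a multi-objective Optuna study (`optuna.create_study(directions=["maximize", "minimize"])`) to obtain a Pareto front of accuracy versus deployment cost instead of a single weighted optimum.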

Requirements

- Good knowledge of Python and ML frameworks (PyTorch, Optuna)

- Good knowledge of neural networks

References

- Rathi et al., "Exploring Neuromorphic Computing Based on Spiking Neural Networks: Algorithms to Hardware"

- Song et al., "Compiling Spiking Neural Networks to Neuromorphic Hardware"

- Kartashov et al., "SpikeFit: Towards Optimal Deployment of Spiking Networks on Neuromorphic Hardware"

- Svoboda & Adegbija, "Spiking Neural Network Architecture Search: A Survey"

- Na et al., "AutoSNN: Towards Energy-Efficient Spiking Neural Networks"

- Boyle et al., "SANA-FE: Simulating Advanced Neuromorphic Architectures for Fast Exploration"