Feature selection and model compression: fast, efficient and explainable machine learning

- Type: Bachelor's / Master's thesis

- Date: from 03/2023

- Tutor:

Background

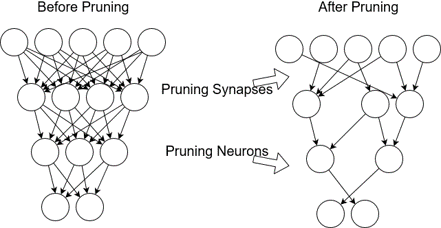

In the machine learning community, datasets with tens of thousands of features are no longer uncommon. Using deeper and larger neural networks has become a rule of thumb in such cases, and it does bring many benefits. However, it increases both the hardware and the time needed to train deep neural networks (DNNs), and it is very hard to explain why and how the resulting models work. Selecting a small subset of features while minimizing the generalization error has therefore been a major research focus in recent decades, along with model compression for efficient computation, especially on low-cost devices.

Tasks

Under this topic you will have the chance to further develop an existing state-of-the-art feature selection method, or to use this approach to advance model compression algorithms. There are plenty of opportunities to extend previous work and to challenge other cutting-edge methods.

Possible research topics include (but are not limited to):

- Fast feature selection using GPU acceleration

- Feature selection for regression problems

- Adaptive model pruning via feature selection

- Explainable DNN models and model visualization

- Advanced dropout for DNN training

- ...

Required skills

- High motivation to learn new technologies

- Skills in programming (Python, C++, ...)

- Experience in the machine learning field is a plus